If you are a casual TV viewer or gamer, HDR may be an unfamiliar term, simply because nothing you use day to day requires it. HDR is a feature that has mostly been available on high-end displays.

It’s nothing new; HDR has been around for years, especially in the gaming industry. Most new TVs have HDR, especially the high-end ones which include 4K resolution. 4K with HDR is like peanut butter and jelly, a heavenly combo.

The extra detail, the crispness, the lifelike lighting: it makes a night and day difference when you compare a display without HDR to one that supports it.

It wasn’t a trend back in the day, but people are now getting familiar with the feature thanks to the growing availability of monitors that support HDR.

So is there any point in buying an HDR display or upgrading to one? Is HDR alone enough to warrant an upgrade? Is it worth spending extra money? I will discuss this in detail throughout this article.

What Is HDR Technology? What Does It Do?

HDR, which is an abbreviation for High Dynamic Range, is not a new term. It’s been around for some years, especially in the gaming industry. Game developers have implemented HDR for some time, but it is useless if you don’t own an HDR display. And these displays tend to be high-end, so it is not unusual that most people are unaware of the term.

But you can definitely see HDR usage increasing thanks to the availability of new monitors, and people are also willing to buy high-end TVs just so they can enjoy HDR entertainment.

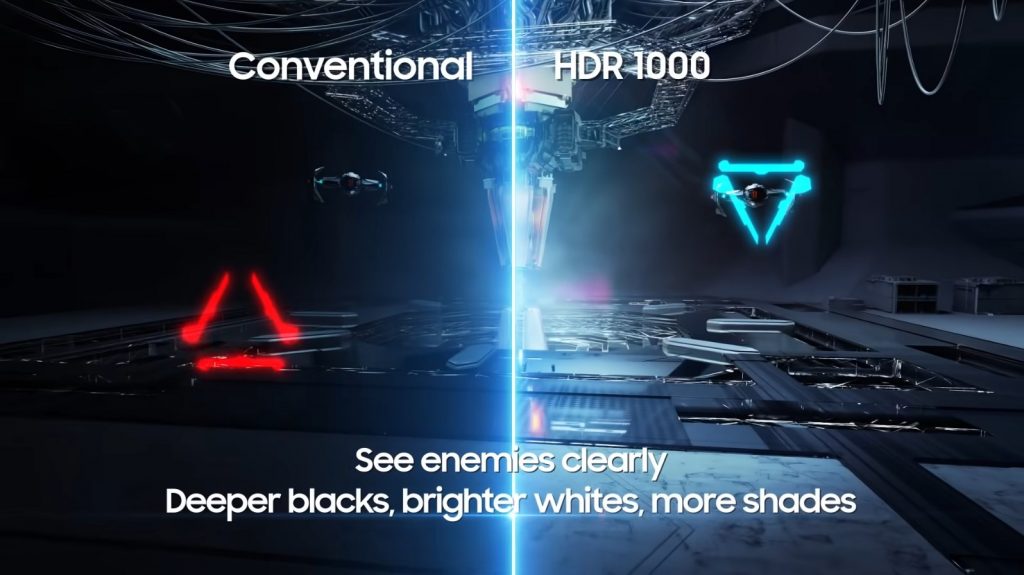

HDR exists for one major purpose: to improve image quality by creating realistic lighting, bright, rich colors, and high contrast, which together make the image feel lifelike.

There is a night and day difference when you compare a non-HDR image with an HDR image side by side. The result can be jaw-dropping. Especially for people who love to watch movies and TV shows in the comfort of their house, a 4K HDR-compatible TV is a must-buy.

In games, too, the difference is huge, to the point that you might mistake an in-game render for a real-life photograph.

Games like Forza Horizon, which already feature realistic landscapes, highly detailed environments, and real-life driving physics, support HDR, and I tell you, there is no going back once you have had a taste of HDR gaming. It is a completely out-of-this-world experience.

#1- Brighter Highlights With More Contrast

The contrast ratio is one of the major properties of a display. It describes the gap between the brightest white and the darkest black the screen can produce. The higher the ratio, the blacker the blacks and the whiter the whites.

Every display has to render these two extremes, and the contrast setting lets you tune them to your liking. HDR pushes this further, creating really crisp, deep shades of black and white.
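
To put a number on the idea, here is a minimal sketch of how a contrast ratio is usually worked out: the luminance of the brightest white divided by the luminance of the darkest black. The function name and the sample measurements are made up for illustration and are not taken from any real display.

```python
def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Return peak white luminance divided by black luminance."""
    if black_nits <= 0:
        # A true zero black level would give an infinite ratio.
        raise ValueError("black level must be greater than zero for a finite ratio")
    return white_nits / black_nits


# Hypothetical measurements in nits (cd/m^2), for illustration only.
sdr_ratio = contrast_ratio(white_nits=300, black_nits=0.3)
hdr_ratio = contrast_ratio(white_nits=600, black_nits=0.1)

print(f"SDR example: {sdr_ratio:.0f}:1")
print(f"HDR example: {hdr_ratio:.0f}:1")
```

The takeaway is that a display can raise its ratio either by getting brighter or by getting darker blacks, and HDR displays aim to do both.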

The clearer and deeper these shades are, the closer the image gets to lifelike. Especially if you are watching a dark, scary movie in a dimly lit room, the dark objects on your screen will stay visible and stand out.

In games, this feature can be used to your competitive advantage. Dim colors get deeper and clearer, so if you are looking for an opponent who may be hiding, you get a better view of dark areas and surroundings.

It is definitely a performance-improving feature, and you wouldn’t regret it if you experienced it once, whether in gaming or any other entertainment media.

#2- Produces Better Images

HDR can bring out the brightest highlights of an image on your display. And it’s not restricted to still photos; you can enjoy anything in HDR on your display.

Games need official HDR support if you want to play them in HDR, but other than that, you can stream, watch movies, or browse YouTube on your HDR display.

HDR creates more depth by highlighting the dim parts of the image and deepening the dark colors, so the image more closely resembles real-life photography. The difference between a standard display and an HDR one is huge.

Let’s say you are out looking for a new display to buy, and you come across 2 displays that are showcased side by side. One is a normal display you can find anywhere, the other is an HDR display.

You can easily spot the huge gap in quality between those displays, and being a consumer, you will want to get an HDR display because it produces better image quality with lifelike colors.

HDR is a helpful feature for video editors, graphic artists, photographers, and YouTube content creators. It helps them capture the truest, most accurate colors possible. Video editors and photographers will find color correction easier if they are already looking at the right colors, and YouTube creators want to give their audience better quality content.

#3- Striking Black Levels And Shadows

This is arguably the major selling point of an HDR screen. HDR vastly improves black levels. Highly detailed shadows and deeper blacks make the image visually striking, and as a first-time user you will appreciate the colors and dynamic range of HDR all the more compared to a standard SDR screen.

SDR screens are notorious for pale, washed-out colors; even black might look bleached or feeble. With HDR, shadows show more detail, and you can see movement in darker areas.

This is a helpful feature for gamers who play competitive shooters or battle royales, where camping and waiting for your opponent is common. Detailed shadows give you the advantage of seeing clearly.

HDR makes sure that brighter areas do not outshine darker areas, and it feels like HDR is making both dark and bright spots distinctive. Your bright colors are saturated, and dark colors stand out, making the overall image livelier.

This is something SDR is not capable of. HDR simultaneously keeps the balance between dark and bright colors so that in places where bright colors are dominant, dark colors do not wash out or feel inferior.

#4- Color Space And Color Gamut – Brings More Colors

Everyone likes bright, distinctive colors. The range of colors a display can reproduce is called its color gamut. An HDR display supports a wider gamut than a standard one, so it has more colors to choose from when drawing the picture on your TV.

Closely related is the color space, which defines how those colors are organized and encoded so that what you see on screen has a more defined look.

How finely the colors are divided is measured in bits (the color depth), and a couple of extra bits per color channel can improve image quality by an enormous margin. With access to a wide variety of colors, HDR delivers the best image quality possible.

HDR balances the darker shades to keep the image clear even with such a wide color gamut. SDR tries to get a brighter picture by turning up the hue, which does the job, but the image ends up looking comparatively worse and bleached.

HDR always manages image quality by avoiding overexposure, and shadowy areas are kept detailed throughout even though the rest of the color palette is brighter.

Types of HDR: Are There Different HDR Formats?

HDR is categorized into 5 different formats, which are supported by different manufacturers. The base version is HDR10, the standard you see every day in streaming apps such as Netflix, Amazon Prime Video, YouTube, and the list goes on and on.

If we boil them down to their basics, all the different types of HDR do the same job: they improve visual quality by adjusting color and contrast depth. The main difference is how each of them uses metadata.

Whether it’s dynamic or static, each HDR type relies on metadata to adjust the quality of an image or a scene. Metadata is extra information sent along with the video that tells the display how bright and colorful the content is supposed to be.

Dynamic metadata is more flexible: it can adapt to the brightness of each scene, while static metadata stays the same from start to finish. Static metadata gives consistent results, but as scenes change, some detail may get lost in especially bright or dark moments.
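
The difference can be sketched with a toy model. The snippet below is only an illustration under simplifying assumptions: real displays use non-linear tone-mapping curves, and the `tone_map` function, the 600-nit display, and the scene values are all hypothetical. It shows why one film-wide peak value (static) can crush a dark scene, while a per-scene peak (dynamic) leaves it alone.

```python
DISPLAY_PEAK_NITS = 600  # hypothetical display capability


def tone_map(pixel_nits, content_peak_nits, display_peak_nits=DISPLAY_PEAK_NITS):
    """Linearly scale a pixel so that content_peak_nits lands on the display peak.

    Real tone mapping is non-linear; a linear scale keeps the sketch simple.
    """
    if content_peak_nits <= display_peak_nits:
        return pixel_nits  # content already fits, so nothing is compressed
    return pixel_nits * display_peak_nits / content_peak_nits


# Hypothetical film: each scene is a list of pixel luminances in nits.
scenes = {
    "dark cave": [2, 10, 80, 200],
    "sunlit beach": [50, 400, 1500, 4000],
}

# Static metadata: a single peak value describes the whole film.
film_peak = max(max(pixels) for pixels in scenes.values())

for name, pixels in scenes.items():
    scene_peak = max(pixels)  # dynamic metadata: a peak value per scene
    static_out = [round(tone_map(p, film_peak), 1) for p in pixels]
    dynamic_out = [round(tone_map(p, scene_peak), 1) for p in pixels]
    print(f"{name}: static={static_out} dynamic={dynamic_out}")
```

In this toy model the cave scene is dimmed along with everything else under the static approach, because the film-wide peak comes from the beach scene, while the per-scene approach shows it at its intended brightness.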

HDR10 vs HDR10+ vs HLG (Hybrid Log-Gamma) vs Dolby Vision vs Technicolor

HDR10 is known as the base version, and it is supported by practically every media app. It is no doubt a step up from SDR, but being the vanilla variant of HDR, it falls short in image quality where its superior versions don’t.

Because HDR10 is free, it is supported by every manufacturer and used in nearly all devices, apps, and streaming websites; you can say it’s a universal format.

HDR10 has one big flaw that makes it inferior to the rest of the types. It supports only static metadata, which I have already explained above. Static metadata applies a single look throughout an entire movie, TV show, or game.

This is, of course, better than SDR, as it is brighter and more detailed in contrast, but having a single look throughout doesn’t do justice to the brighter or darker scenes. No adjustment is made to improve the lighting from scene to scene.

HDR10+ is a better version of HDR10. It is also known as the Samsung format, which is technically correct: Samsung has put all of its support behind it, and other companies and brands are coming forward to adopt it because there are no licensing fees.

Some are hesitant, perhaps because they don’t want to use a format backed by a rival manufacturer. Either way, the good thing about HDR10+ is that it is significantly better than the vanilla version because of the addition of the Plus.

Plus here means that HDR10+ has upgraded from static metadata to dynamic metadata. Now, if you watch any movie or show on an HDR10+ display, you will notice better lighting and contrast throughout, no matter how dark or bright the scene is, because HDR10+ constantly adjusts its settings to match what is being displayed.

Dolby Vision may sound familiar, and there is a good chance you have heard of it before. It is widely available and supported by many streaming companies, like Amazon and Netflix, and you can also find Dolby Vision support on Ultra HD Blu-ray.

Dolby Vision supports dynamic metadata, and it is one of the best-looking HDR formats out there. However, it is not free to use, and there is a licensing fee that needs to be paid.

This could be a reason why Dolby Vision has less content than other formats. Not many companies prefer it, especially the industry giants; they would rather introduce their own version of HDR.

HLG (Hybrid Log-Gamma) was introduced by two well-known broadcasters, the BBC and Japan’s NHK, as a solution for broadcasting HDR content on national television.

HLG is used more widely in Japan than by the BBC, but the BBC is picking up some pace. Although it hasn’t aired anything in HLG on broadcast television, it has released some content on its streaming service that you can stream in 4K HDR.

Technicolor is somewhat unique. It was developed together with Philips, and its purpose is to convert SDR content into HDR so it is compatible with HDR devices, while also letting you view HDR movies and TV shows on non-HDR displays. In other words, Technicolor HDR offers backward compatibility for HDR content.

Is DisplayHDR 400, 500, Or 600 Worth It?

Luminance, or in plain words, brightness, is a huge factor when deciding what display to buy. Of course, no one wants to spend their money on a dim display. Thankfully, HDR displays have no issues here.

They are always brighter than SDR displays, but there are categories and certifications that further break down HDR displays according to their level of brightness.

Brightness is measured in nits. VESA, a group of electronics professionals that sets standards and regulations for the industry, issues certifications based on it. A certified HDR display lists this certification in its specifications, telling you the brightness level it can reach.

The most common ones are DisplayHDR 400, 500, and 600. DisplayHDR 400 is the minimum for a display to be certified as HDR. That means the display has to reach 400 cd/m² (400 nits) of brightness to qualify as an HDR display.

It is a no-brainer that DisplayHDR 400 is the basic tier of HDR screen, so it is not going to be exceptional, because there are still DisplayHDR 500 and higher ratings that deliver better brightness.

As for whether it is worth buying a DisplayHDR monitor in the 400 to 600 nits range, the answer is quite straightforward. You have to consider at least a 400 nits display if you are looking for an HDR screen, because that’s the minimum requirement for an HDR display.
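
As a rough illustration of how the tiers line up, the sketch below maps a panel’s peak brightness to the highest tier it could reach. It only looks at the brightness floors mentioned in this article (400, 500, 600, and 1000 nits); the actual certification tests more than peak brightness, so treat this as a simplified assumption, and `highest_tier` as a made-up helper name.

```python
# Brightness floors named in this article, in nits (cd/m^2).
DISPLAYHDR_TIERS = [400, 500, 600, 1000]


def highest_tier(peak_nits: float):
    """Return the highest tier whose brightness floor the panel reaches, or None.

    Simplification: only peak brightness is checked here.
    """
    reached = [tier for tier in DISPLAYHDR_TIERS if peak_nits >= tier]
    return f"DisplayHDR {max(reached)}" if reached else None


# Hypothetical panels, for illustration only.
for nits in (350, 450, 650, 1100):
    print(f"{nits} nits -> {highest_tier(nits)}")
```

A 350-nit panel returns `None` here, which matches the point above: below 400 nits a display does not count as HDR at all.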

So what about the 500 and 600 nits screens?

It’s all about personal preference. Higher nits mean better image quality, and this will affect you the most if you are a gamer. Better brightness is a major plus point when it comes to games and scenes with dark environments.

So if you are a gamer or laptop/PC user, there is no need to go above a 500 or 600 nits display. That range is worth it for the result it gives, and it will enhance your gaming and viewing experience noticeably.

What Do You Need For HDR? How To Turn On HDR?

The answer depends on how you use your display. If you are a gamer or a PC/laptop user, your requirements for HDR will be different.

First of all, you need a GPU that has built-in support for HDR. You can easily find HDR gaming monitors nowadays, so HDR in gaming is becoming quite popular in comparison to a few years ago.

You can check out the lists of Nvidia and AMD GPUs that support HDR. Don’t worry, they are not expensive unless you are planning on 4K HDR gaming or streaming.

Windows 10 has built-in support for HDR, so all you need to do is enable it. And if you have the correct hardware, you will be good to go.

Go to the Windows settings, which you can open from the Start menu. Navigate to the Display section and look for the Windows HD Color settings. This is where you enable HDR support. You can also manage your displays here if you are using multiple monitors.

#1- HDR-Compatible Graphics Cards

Nvidia’s newer series like GTX 10xx and 16xx GPUs have built-in support for HDR, and they come ready to be installed and used. Just plug and play.

That’s because newer-generation cards support HDR over DisplayPort, which was introduced with DisplayPort 1.4. The older GTX 9xx series has the older DisplayPort 1.2, so for HDR you have to connect those cards over HDMI 2.0.

In short, any GPU below the GTX 10xx series is limited to HDMI 2.0 for HDR, the GTX 10xx series comes with DisplayPort 1.4, and the GTX 16xx and RTX series have built-in output support for HDMI 2.0 and DisplayPort 1.4a.

AMD has a similar split, but even its budget-friendly cards support DisplayPort 1.4 and HDMI 2.0. It’s the older R9 series that is stuck with HDMI 1.4b and DisplayPort 1.2.

Anything above the RX 460 is compatible with HDMI 2.0 and DisplayPort 1.4a. For G-Sync HDR and FreeSync HDR, you have to upgrade to at least the GTX 10xx or RX 4xx series to fully experience G-Sync or FreeSync together with HDR. You should be good to go if you own any of these cards, but owners of older GPUs can still enjoy vanilla HDR.

#2- Display Cables

When you buy an HDR display, you expect the best output from it. And you should; you are paying for HDR because you want better image quality.

You have to make sure you are using a compatible cable for your HDR display. HDR isn’t something new, and HDR support in DisplayPort and HDMI was introduced back in 2015, so there is a chance you already own a suitable cable.

You can use almost any HDMI cable for casual browsing and watching movies, but if you are a gamer who loves high frame rates, you will want to consider an Ultra High Speed cable. HDMI 2.1 is a perfect choice for your HDR display, especially if it supports HDR10+ or another format with dynamic metadata.

With DisplayPort, there isn’t anything different or complex; you can just plug in and enjoy your gaming. DisplayPort supports high frame rates and HDR at up to 120 FPS at 4K resolution, so it is the better, hassle-free choice for gamers.
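
To see why the cable and port matter, it helps to estimate how much data an HDR picture actually needs. The sketch below multiplies pixels, frames per second, and bits per pixel to get a raw, uncompressed rate. It deliberately ignores blanking intervals, link encoding overhead, and compression, so the real requirement on the wire differs; the function name is made up for illustration.

```python
def raw_video_gbps(width, height, fps, bits_per_channel, channels=3):
    """Uncompressed pixel data rate in gigabits per second.

    Simplification: blanking intervals and link overhead are ignored.
    """
    bits_per_second = width * height * fps * bits_per_channel * channels
    return bits_per_second / 1e9


modes = [
    ("4K 60 Hz, 8-bit SDR", 60, 8),
    ("4K 60 Hz, 10-bit HDR", 60, 10),
    ("4K 120 Hz, 10-bit HDR", 120, 10),
]

for label, fps, depth in modes:
    print(f"{label}: {raw_video_gbps(3840, 2160, fps, depth):.1f} Gbit/s")
```

For scale, HDMI 2.0 tops out at 18 Gbit/s of link bandwidth and HDMI 2.1 at 48 Gbit/s, which is why high-frame-rate 4K HDR is where the newer, faster connections start to matter.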

How To Choose The Best HDR Monitor?

It all comes down to the personal preference of the consumer. Whether you are a gamer, a professional graphic designer or video editor, or just a casual viewer who loves to watch tons of TV shows and movies, there is no single answer.

Video editors and graphic designers want the maximum image quality a display can provide.

They aim for lifelike visuals because, of course, it makes their work easier. There are a number of factors that could influence your decision when buying an HDR monitor.

Let’s go through them one by one.

#1- Color Depth

HDR has been gaining popularity lately because HDR monitors are better in every way when it comes to displaying accurate, bright colors. They have a wide color gamut, which allows them to precisely tune colors and contrast on your display.

Displays that support dynamic metadata HDR are excellent. Color depth is measured in bits, so if you are shopping for an HDR display, this is a spec to look for.

Most HDR panels provide 8+2 bits of color depth: an 8-bit panel that uses 2 bits’ worth of dithering (often listed as 8-bit + FRC) to approximate the color count of a 10-bit panel.

It is not on par with a true 10-bit display when it comes to quality, but keep in mind that true 10-bit displays can cost twice as much as 8+2 bit monitors.

Compared with normal SDR displays, 8+2 bit displays provide better saturation and contrast because of the higher number of shades. A 10-bit signal covers about 1.07 billion colors, compared with 16.7 million for 8-bit and 262,144 for the 6-bit panels used in some budget SDR displays.
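
The color counts quoted for different bit depths come from simple arithmetic: each of the three color channels (red, green, blue) gets 2 to the power of the bit depth shades, and the three channels multiply together. A minimal sketch, with `color_count` being a name chosen here for illustration:

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given bit depth per channel."""
    shades_per_channel = 2 ** bits_per_channel
    return shades_per_channel ** channels


for bits in (6, 8, 10):
    print(f"{bits}-bit per channel: {color_count(bits):,} colors")
```

Each extra bit per channel doubles the shades in that channel, so going from 8 to 10 bits multiplies the total color count by 64.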

#2- HDR Backlight Dimming

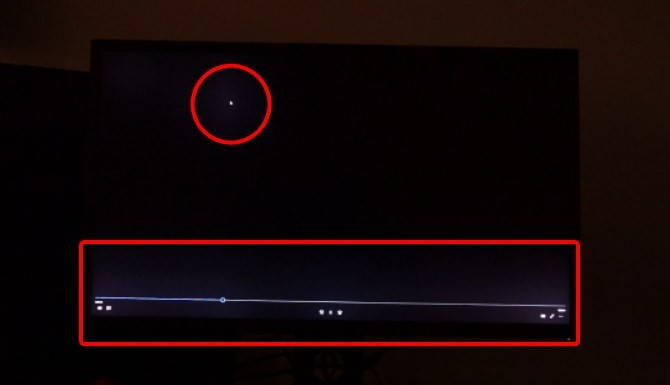

HDR backlight dimming, or local dimming, is used to deepen the dark colors on your screen for a crisper look. It controls the contrast ratio as parts of the screen go dark, so blacks appear darker and look more natural and realistic.

Bright colors on your screen become clearer and more visible, improving the overall quality of the scene. This feature isn’t flawless by any means: the deeper the dark colors get, the more detail they can lose, especially if a bright object appears in the same scene.

Bright objects will cause blooming against darker backgrounds. Blooming occurs when bright light bleeds over the dark background.

This feature is mostly available in high-end TVs. Lower-end TVs do offer it, but there it is not as effective as it seems on paper.

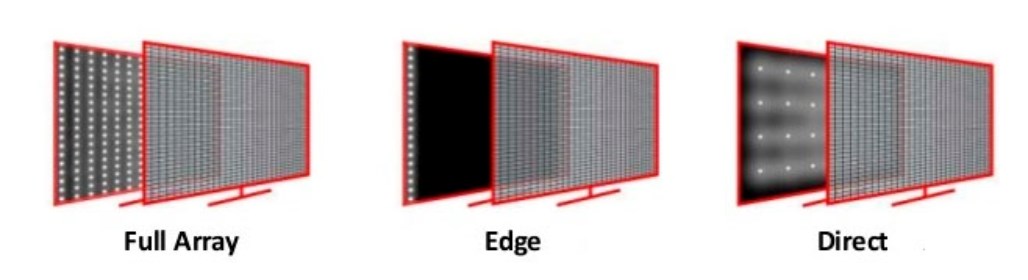

#3- Full-Array Local Dimming (FALD)

FALD, or full-array local dimming, is different from basic backlight dimming. A basic dimming display has a single backlight behind the screen that is controlled as one unit.

When that backlight is supposed to dim, the whole image gets darker. That is not the case with FALD displays, whose backlight is divided into many small zones that light up individually.

This allows the display to control brightness in different parts of the screen separately rather than across the whole panel at once. It is, however, an expensive feature found in premium TVs and some high-end gaming monitors.
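
The idea of independently lit zones can be sketched in a few lines. In this simplified model, the frame is a grid of brightness values from 0 to 1, and each zone’s backlight is set to the brightest pixel inside it. Real displays use more sophisticated control logic, so both the rule and the `zone_backlight` name are assumptions made for illustration.

```python
def zone_backlight(frame, zone_rows, zone_cols):
    """Return one backlight level per zone: the brightest pixel in that zone.

    Assumes the frame dimensions divide evenly by the zone counts.
    """
    rows, cols = len(frame), len(frame[0])
    zone_h, zone_w = rows // zone_rows, cols // zone_cols
    levels = []
    for zr in range(zone_rows):
        row_levels = []
        for zc in range(zone_cols):
            block = [
                frame[r][c]
                for r in range(zr * zone_h, (zr + 1) * zone_h)
                for c in range(zc * zone_w, (zc + 1) * zone_w)
            ]
            row_levels.append(max(block))
        levels.append(row_levels)
    return levels


# Hypothetical 4x4 frame: mostly black, with one bright highlight (top right)
# and one faint detail (lower right).
frame = [
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.1, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

print("single backlight:", zone_backlight(frame, 1, 1))
print("2x2 zones:", zone_backlight(frame, 2, 2))
```

With a single backlight, the one highlight forces the whole screen to full brightness. With four zones, only the zone containing the highlight is fully lit and the rest stay dark or dim, which is where the deeper blacks come from.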

#4- Edge-Lit Dimming

Edge-lit dimming gets the most out of a small number of LEDs while still illuminating the whole display. The LEDs are placed along the edges of the screen rather than behind it.

The lights run along each side of the screen from bottom to top. Their light shines into a semi-transparent plastic sheet, which spreads it across the display.

This is the most cost-effective method, as it needs fewer lights to get the job done. It is also used in most LED displays today because it is so cheap.

However, if you compare it to LED displays that use other backlight technologies, the overall lighting seems quite pale, and you may notice that some colors feel muted.

This is because the backlight is not shining on the display directly but on a plastic sheet, which then illuminates the display. It’s cheaper than most displays out there, so that is a plus point. You can go for an edge-lit LED display if you don’t want a serious dent in your wallet.

#5- Global Dimming

This is the simplest type of dimming, and I think the name speaks for itself. The whole panel is treated as a single zone. If there is a bright scene, your screen raises its brightness, and if there is a dark scene, the display gets darker.

This is the most common and cheapest method of dimming, and it is widely used in standard LED-backlit LCDs. It works best for scenes with few colors and ordinary scenery. You will find it mostly on laptops because of its low power consumption; it saves a lot of battery life.

Global dimming works best in a fully dark scene, or when a show is ending, because the backlight effectively switches off and gives the impression of very rich, deep blacks. But as soon as something bright appears in the scene, the backlight comes back on across the whole screen, and you are back to standard, cheap-looking colors. For the standard user this won’t hurt, but entertainment lovers should avoid it.

#6- Peak Brightness

This is one of the major factors that can influence your decision when buying an LED display. If you are ready to pay for it, you will want better image quality. HDR displays produce better image quality, but the HDR label alone doesn’t mean a display is the best thing ever.

Certifications help differentiate image quality between panels. Brightness is measured in nits, and the minimum requirement for a panel to be recognized as HDR is 400 nits.

This is the standard for most HDR panels, but as you go up, you will see improvements in image quality, contrast ratio, and brightness control.

For a standard user, a 600 nits certified panel gets the job done, although the scale goes up to 1000. On huge 4K television sets, you will see some panels reaching 1000 or even 2000 nits, which sounds expensive and probably is.

#7- VESA Display Standards

VESA (Video Electronics Standards Association) is a group of video electronics professionals that sets standards for displays and related electronics.

For example, for a monitor to be certified as HDR, it has to produce a minimum of 400 nits of brightness, a standard set by VESA. Any display under 400 nits will not be considered an HDR display.

If you are buying a monitor, be careful, because in some cases you will see HDR 400 written on the box. That product is not certified by VESA, because VESA-certified displays are always labeled with the DisplayHDR name.

So instead of HDR400, you should look for DisplayHDR 400 as well as the official DisplayHDR logo. VESA also publishes the standard for mounting a display on the wall.

According to that standard, a display has 4 screw holes at the back, which attach to the monitor mount.

#8- OLED

OLED isn’t out of the question when you visit the local market and see tons of options, but HDR LED is still a little better in almost every way.

The first thing to check on a display is how well it produces black levels. Achieving rich, deep dark colors is essential for a display to be good.

In that case, OLEDs reign supreme. OLEDs do, however, suffer in the brightness category, where they are not as capable as LEDs. LED displays have powerful LED backlights that can illuminate the whole screen.

There is no doubt that LEDs produce brighter images than OLED. OLED typically tops out at around 600 nits, but LEDs can go beyond that, even up to 2000 nits, so you can expect better image quality, contrast ratio, and color depth here.

OLED is suitable for dark rooms, like a small home theatre setup where you relax and enjoy movies, but LEDs go a long way and perform well in various situations. At a professional level especially, you’d want an LED display for color grading, video editing, or gaming in general.

#9- Resolution

This is one of those things you should keep in mind while you are out on the hunt for an HDR display. It depends on your choice: what do you want to do with your display? What is its purpose?

If you are a gamer looking forward to HDR gaming, you can easily go for either 1080p or 1440p resolution, depending on what your system can handle, of course.

Many people treat 4K and HDR as competing features and pit the two against each other, when in reality it’s pointless to do so. You can easily get displays that include both.

If you want to set up a home theatre system and you are looking for a 60-inch TV, you can safely go for a 4K HDR display. If you want something compact, say for gaming, video editing, or work, then a smaller 1080p screen will be sufficient. It’s all about your preferences and priorities.

Which Panel Is Best For HDR?

Most modern monitors use 3 types of panels: IPS (in-plane switching), TN (twisted nematic), and VA (vertical alignment). These panels differ in quality and mostly target different consumer bases. The IPS panel has the upper hand in terms of quality and color.

The image quality is accurate and consistent, and the viewing angles are excellent. You can look from any side or any angle and still see the picture clearly.

IPS now competes with TN panels on response time, reaching a competitive 1 ms while giving the best picture quality you can get. IPS also supports HDR, so for gamers and casual users alike it is the best panel choice for watching HDR content.

TN panels, on the other hand, have poor image quality and a very narrow viewing angle. The image gets dim and loses color if you watch it from a different angle.

You have to sit straight and look at the screen directly. These panels are mostly targeted at gamers because they offer a high refresh rate and competitive response time at a lower cost. As long as you sit straight and look at the screen from a decent height, you will be fine.

VA panels sit in the middle ground between the other two. The image is not as rich as you might expect, but VA panels have the upper hand in contrast ratio. IPS and TN panels reach about 1000:1 on average, while VA panels can have a contrast ratio between 2500:1 and 3000:1.

They can maintain a good balance between the darkest and brightest parts of an image while producing rich, deep blacks. Response time is typically around 4 ms, which is slow for competitive gaming, and you might notice some motion blur or image trailing in fast-paced video games.

All 3 types have their own strengths and weaknesses, but IPS may be the best choice for an HDR panel. Just be aware that IPS panels can be pricier than TN and VA panels.

Halo Effect Explained

This is a common issue in HDR screens, and it occurs quite often. The reason is simple: when bright objects meet dark areas or sit next to dimly lit objects, you see high contrast around those bright objects.

It appears as if some light is bleeding from those objects into the darker areas, which ultimately creates an unpleasant look.

For example, say you captured beautiful tall coconut trees with a clear blue sky in the background. In HDR mode, you will notice a bright, cloudy effect around the leaves where they touch the blue sky.

This is mostly the result of post-processing in Photoshop or other software. The shades of the leaves and the natural color of the blue sky don’t quite match, which can result in an imbalanced, surreal-looking image.

The halo effect is normal in LED-backlit displays: because the whole display is illuminated by LED lights, some light can escape and bleed across the screen, causing this excessive blooming effect.

You can do very little to avoid this blooming effect. You can either opt for a display that has no blooming, or you can lower the backlight a bit in your display settings so it balances better with the darker shades.

Is HDR Better Than 4K?

4K is a display resolution that can increase the quality of your picture, making it sharper and clearer. HDR is a completely different thing: 4K refers to the number of pixels on the screen, while HDR controls the contrast ratio, brightness, and color quality of the image.
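
Since resolution is purely a matter of pixel count, it is easy to put numbers on it. The short sketch below uses the common consumer dimensions for each resolution name (an assumption, since "4K" is also used for slightly different cinema dimensions); `pixel_count` is a helper name chosen for illustration.

```python
# Common consumer resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total number of pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels")

ratio = pixel_count("4K") / pixel_count("1080p")
print(f"4K has {ratio:.0f}x the pixels of 1080p")
```

None of these numbers say anything about brightness or contrast, which is exactly why resolution and HDR are separate properties of a display.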

Both belong to a completely different category, and it is unwise to compare these against each other. You don’t have to put them against each other when modern premium TVs have both of them. You can buy yourself a premium 4K HDR TV, which includes both features so you can enjoy the best of both worlds.

However, if you have to choose between a 4K display and a 1080p HDR display, both have strengths that can’t be directly compared, but 4K, in general, delivers sharper detail. The definition of a 4K image is unbeatable.

What About The HDR & G-Sync?

Screen tearing is a dreadful nightmare for a gamer. G-Sync was introduced by Nvidia to counter issues such as frame stutter, input lag, and screen tearing.

Today, G-Sync has become standard for most gamers, and it is widely available for purchase. HDR and G-Sync both work to elevate the user experience, making the image better and more pleasing to look at.

It is useless to compare these two standards against each other, because Nvidia has introduced G-Sync Ultimate panels, which incorporate both G-Sync and HDR. You can have both features in one display without having to choose.

G-Sync Ultimate delivers a 144 Hz refresh rate along with 1000 nits of brightness and 95 percent coverage of the DCI-P3 color gamut. G-Sync Ultimate monitors are not standard these days, so you might have a problem finding one at a good price. These monitors are quite expensive, and the certification applies only to monitors.

What Is Mobile HDR Premium?

After years of success in television displays, HDR was finally introduced on mobile, and since 2017 most flagship phones have used HDR displays.

It’s like the experience you get on an HDR TV, but on a smaller screen with the added benefit of portability. Most people will be skeptical about this because the screen is so much smaller than a big HDR TV.

There is no way the quality will match those large displays, and to some extent that’s true. The experience of watching a movie on an HDR TV is different, but that doesn’t mean mobile HDR is below standard.

It’s all about a display that can provide an accurate and rich color palette, impeccable brightness, and the ability to maintain a good contrast ratio.

Mobile HDR has a couple of standards, the most commonly used being HDR10, which is cost-effective and widely available on phones. In the future, we might see HDR reach low-end and mid-range phones as well.

Should You Buy A Phone For HDR?

Nowadays, streaming apps are widely popular, and people can’t seem to live without them. Netflix, Amazon Video, iTunes, and even YouTube now offer HDR content for viewers to consume. Not to mention that Disney Plus is also expanding its HDR catalog.

There is no doubt that there is a lot of HDR content available for mobile phones, but you have to consider some limitations.

HDR content is demanding to play back and can drain your battery quickly, leaving you with only a few hours of battery life. You can invest in a power bank in case you run out of battery while you are out.

But it is still a good choice for someone who wants to watch HDR on the go instead of being tied to the TV at home.

It also suits people who travel a lot and don’t want to miss their favorite TV shows. An HDR display is still going to be better than a standard mobile display, and with the added bonus of higher brightness, you won’t have issues using your phone in sunlight.

Are There Any Downsides Of HDR Monitors?

HDR monitors are undoubtedly superior in terms of quality and the range of colors they offer, but there are some downsides that you should consider before making a decision.

Let’s say you are a gamer and you want lifelike, rich colors with well-balanced contrast in your games. You would want an HDR monitor, but many games still don’t support HDR; only some offer an HDR option in their settings.

HDR displays are usually driven by a powerful LED backlight that can ruin your gaming experience in some cases. In bright scenes, HDR can amplify the brightness to the point that it causes blooming. Scenes can also look washed out or pale if the game is not using HDR correctly.

The other major downside is the price, which affects both gamers and regular users. HDR displays are expensive for users on a budget. Some displays even cost more than your whole computer.

You can invest in one if you are looking to the future, as more games start implementing HDR. Entry-level HDR monitors are cheaper, but they don’t provide the same benefit and quality that more premium HDR displays do.

HDR In PC Gaming And Console Gaming: The Input Lag

HDR is nothing new when it comes to televisions, and now gaming monitors are adopting this standard. You can easily find an HDR gaming monitor without any hassle.

PC and console players both get the advantage of HDR during gaming. Consoles are usually paired with TVs, while PC owners prefer high refresh rate monitors. HDR significantly improves the visual quality of your image, but it cannot escape the downsides that come with it.

HDR in a game can be quite taxing on your console, especially if the game is not using it to its full potential. HDR-enabled gameplay can require more time to process each image, and this is where input lag is born.

Your PC setup matters, and if you have high-end gaming hardware, you might not notice input lag that much, but game optimization plays a large role in this category.

Fast-paced gameplay can look dull on your screen because HDR takes time to process; it may not keep up with action-heavy gameplay, especially in FPS games like CS:GO, Valorant, Call of Duty, Destiny 2, etc. For console gamers, HDR makes more sense because of the single-player, cinematic games that complement HDR perfectly.

Competitive games, however, are a red zone. You should avoid using HDR in esports titles because of their fast pace, and because HDR can also tank your FPS. Competitive gamers are already conscious of frame rates.

Even top professional players such as TenZ, s1mple, and ScreaM use the lowest settings possible to get higher frame rates. High FPS = low frame time = less input lag. HDR defeats the purpose of these games, as they focus more on gameplay than on visuals.
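To put rough numbers on the link between frame rate and delay, here is a small Python sketch that converts FPS into the time each frame stays on screen. It is a simplification: frame time is only one part of total input lag (the display, the game engine, and your peripherals add their own delay), and the sketch does not measure what HDR itself costs. The function name `frame_time_ms` is just an illustrative choice.

```python
def frame_time_ms(fps: float) -> float:
    """Return how long one frame lasts, in milliseconds, at a given FPS."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Common frame-rate targets for console and competitive PC play.
for fps in (60, 144, 240):
    print(f"{fps} FPS -> {frame_time_ms(fps):.2f} ms per frame")

# 60 FPS -> 16.67 ms per frame
# 144 FPS -> 6.94 ms per frame
# 240 FPS -> 4.17 ms per frame
```

Going from 60 to 240 FPS cuts the time per frame by about 12.5 ms, which is why competitive players are reluctant to enable any setting that costs them frames.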

Is HDR Worth The Investment?

The answer to this question is not as simple as the question itself. In fact, there is no single answer. Everyone has different priorities that can influence their decision to purchase.

I’d say investing in an HDR display is worth it. Few displays can compete with a good HDR panel when it comes to image quality. Of course, they can be a little expensive; that’s why I am using the term investment here.

You are investing in an HDR display for futureproofing. Nowadays, many companies and entertainment media are releasing HDR content, whether it’s games or TV shows.

You are unlikely to regret spending money on an HDR display. Especially for people who work in the graphics or entertainment industry, it is a necessary piece of hardware.

If you are a photographer, video editor, or even a VFX artist, you would want accurate and real-life imagery for the best results.

Even for casual users who spend their free time watching movies or playing video games, it’s a useful feature. You can look at the list of VESA HDR-certified displays and choose the one you think is perfect for you.