If you’ve ever run into screen tearing in a PC game, you know how infuriating it can be: an otherwise flawlessly rendered frame ruined by lag and unsightly horizontal lines.

Nvidia and AMD have both set out to fix the problem without sacrificing frame rates, and the two companies rely on different technologies to do it.

This often leads to a simple recommendation: use G-Sync if you have an Nvidia GPU, and use FreeSync if you have an AMD GPU. Still, there are some real differences between FreeSync and G-Sync.

However, if you have to choose between displays or GPUs, you may be wondering what those differences are and which synchronization method is best for your setup.

Let’s break it down to see whether one is a better fit for you.

FreeSync vs G-Sync Explained

Both G-Sync and FreeSync are intended to make gaming smoother, minimize input latency, and eliminate screen tearing.

They take different approaches to these goals, and what distinguishes them most is that Nvidia keeps G-Sync proprietary, while AMD’s FreeSync is built on an open standard.

Nvidia’s G-Sync technology works through a dedicated processor built into the monitor itself.

V-Sync Explained

V-Sync, which stands for vertical synchronization, is a display technology designed to prevent screen tearing.

Tearing happens when parts of two different frames appear on screen at the same time because the display’s refresh cycle is out of step with the frames arriving from the graphics card. The distortion is obvious because it creates a horizontal cut or misalignment in the picture.

V-Sync solves this by capping the number of frames per second (FPS) a game can output at the monitor’s refresh rate.

In essence, the graphics card detects the monitor’s refresh rate and paces frame delivery to match it.

Although V-Sync eliminates screen tearing, it frequently introduces other problems, such as “stuttering” and input lag.

Stuttering occurs when the time between displayed frames varies considerably, which makes motion look choppy.
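To make that stutter concrete, here is a minimal Python sketch of a simplified double-buffered V-Sync model. It assumes a fixed 60Hz panel and that a finished frame always waits for the next refresh before it is shown. The function name `vsync_display_interval` is hypothetical, and real drivers (triple buffering, frame queues) can behave differently.

```python
import math

REFRESH_HZ = 60  # assumed fixed refresh rate of the monitor
REFRESH_MS = 1000 / REFRESH_HZ  # about 16.7 ms per refresh


def vsync_display_interval(render_ms: float, refresh_ms: float = REFRESH_MS) -> float:
    """Simplified double-buffered V-Sync: a finished frame waits for the next
    refresh, so its on-screen time rounds UP to a whole number of refreshes."""
    # Subtract a tiny epsilon so exact multiples are not pushed up by float rounding.
    refreshes = max(1, math.ceil(render_ms / refresh_ms - 1e-9))
    return refreshes * refresh_ms


# Render times that differ by only a few milliseconds...
for render_ms in (15.0, 18.0, 25.0):
    shown_ms = vsync_display_interval(render_ms)
    print(f"rendered in {render_ms:.1f} ms -> shown for {shown_ms:.1f} ms")
```

In this model, a frame that misses the 16.7 ms deadline by just over a millisecond is held for a whole extra refresh, so on-screen frame times jump between 16.7 ms and 33.3 ms. That uneven pacing is the stutter described above, and the waiting also adds to input lag.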

Since V-Sync was introduced, technologies such as G-Sync and FreeSync have emerged to solve these display performance problems, and their newer tiers also target visual aspects such as image color and brightness.

What Is G-Sync? How Does It Work?

G-Sync is a technology created by NVIDIA that synchronizes a monitor’s refresh rate with the GPU’s output, resulting in smoother motion, particularly in games. It was released to the public in 2013.

G-Sync matters because a monitor with a fixed refresh rate rarely receives frames from the GPU at exactly that rate, and when the two are out of step, tearing and stutter follow.

V-Sync was the earlier fix. This software-based feature makes your graphics card hold frames in its buffer until your display is ready to refresh. That solves screen tearing, but it creates a new problem: input lag.

Because V-Sync makes your GPU hold frames it has already rendered, there is a small delay between what happens in the game and what you see on screen.

G-Sync avoids this by making the display follow the GPU instead. For instance, if a GPU renders 50 FPS, the display refreshes at 50 Hz; if the frame rate drops to 40 FPS, the display adapts to 40 Hz.

G-Sync technology typically has an effective range from 30 Hz up to the monitor’s maximum refresh rate. It adapts the refresh rate by adjusting the monitor’s vertical blanking interval (VBI).

The VBI is the period between when a display finishes drawing the current frame and when it starts the next one. With G-Sync enabled, the display holds off its next refresh until the graphics card delivers a new frame, which prevents tearing and stutter.
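The 50 FPS and 40 FPS example above can be modeled in a few lines. This is a minimal Python sketch, not Nvidia’s actual logic: it assumes a hypothetical panel with the typical 30 Hz floor and a 144 Hz maximum, and the function name `vrr_refresh` is made up for illustration.

```python
def vrr_refresh(fps: float, min_hz: float = 30.0, max_hz: float = 144.0) -> tuple[float, bool]:
    """Return (panel_refresh_hz, in_sync) for a simplified variable-refresh panel.

    Inside the window the panel waits for each new frame, so refresh == fps.
    Outside it, the panel is pinned to the nearest limit and can no longer
    match the GPU one-to-one."""
    if fps < min_hz:
        # Too slow: in practice this is where LFC (or stutter/tearing) comes in.
        return min_hz, False
    if fps > max_hz:
        # Too fast: the panel cannot refresh faster than its maximum.
        return max_hz, False
    return fps, True


for fps in (50, 40, 25, 160):
    print(fps, "FPS ->", vrr_refresh(fps))
```

Within the window the refresh rate simply tracks the frame rate; the interesting cases are the two edges, which is why the size of a monitor’s adaptive range matters so much.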

To keep up with technological advances, NVIDIA also created G-Sync Ultimate, a more advanced tier of G-Sync for high-end displays.

Although G-Sync provides excellent performance across the board, its main downside is its high price. To use native G-Sync, you need both a G-Sync display and a compatible Nvidia graphics card.

This two-part hardware requirement has limited the number of G-Sync devices available to customers. It’s also worth noting that these monitors require a GPU with a DisplayPort output.

Inside the monitor, Nvidia uses a custom board that replaces the standard scaler and handles everything within the display, such as decoding the picture input and regulating brightness.

A G-Sync module has 768MB of DDR3 memory (inside the monitor), which it uses to store the previous frame and compare it with the next one in order to reduce input latency.

Nvidia’s driver on the PC fully controls this proprietary hardware and uses it to adjust the VBI.

When G-Sync is enabled, the display takes its timing from your computer. The display clears the previous image and gets ready to accept the next frame while the GPU renders the new frame into the primary buffer.

As the frame rate rises and falls, the display shows each frame when your PC delivers it. Because the G-Sync board supports variable refresh rates, the image is redrawn at widely varying intervals.

What Is FreeSync? How Does It Work?

Tearing happens when your monitor’s refresh rate is out of step with the game’s frame rate, and if it happens often, it can quickly degrade the gameplay experience.

On AMD hardware, the way to sync your monitor’s refresh rate to your GPU’s rendering is FreeSync. Although the technology may be unfamiliar, using it should not be difficult.

Here’s how it works.

AMD’s graphics cards and APUs support FreeSync, which allows them to change the refresh rate of a connected display.

In general, screen tearing is caused by a timing mismatch. The GPU may produce frames faster than the display can show them, so the display ends up drawing “strips” from different frames.

These tearing artifacts are most visible when the camera pans horizontally. Conversely, if the GPU cannot keep up with the display’s refresh rate, you may notice “stuttering.”

When FreeSync is activated, the display dynamically adjusts its refresh rate to match the frame rate of the current game.

On a 60Hz display, that means up to 60 frames per second. If the GPU’s output falls, the display’s refresh rate falls with it.

If you’re playing an older, less demanding PC game like the first Half-Life, you probably don’t need FreeSync at all. High refresh rates and frame rates make tearing far less noticeable, so adaptive sync is usually unnecessary if your GPU consistently delivers high frame rates.

However, suppose you’re playing a modern, graphically intense game such as Assassin’s Creed Odyssey at 4K resolution.

In that case, even a powerful gaming PC may only manage 40 or 50 frames per second on average, below the monitor’s refresh rate.

In 2015, AMD began supporting this technique under the FreeSync name through its Radeon software. FreeSync creates a two-way communication channel between the Radeon GPU and the off-the-shelf scaler boards in approved Adaptive-Sync displays. These scalers handle the display’s image processing, among other things.

FreeSync displays are often cheaper than G-Sync monitors because G-Sync models use a proprietary module rather than an off-the-shelf scaler.

That module controls everything from the refresh rate to the brightness. Meanwhile, Nvidia maintains a list of FreeSync-class displays that it has validated to work with its G-Sync technology.

Despite their lower prices, FreeSync displays offer many other features to improve your gaming experience, such as 4K resolution, fast refresh rates, and HDR.

AMD’s FreeSync website has a list of FreeSync monitors.

FreeSync Vs G-Sync: Performance

G-Sync works through a dedicated processor built into the monitor, while FreeSync manages the display’s refresh rate using the Adaptive-Sync standard incorporated into DisplayPort. This difference in approach leads to a difference in performance.

If performance and image quality are your top priorities when selecting a monitor, G-Sync and FreeSync devices are available in a wide range of configurations to meet almost every requirement. The fundamental difference between the two technologies is how much input lag or tearing you get.

If you don’t mind tearing and desire reduced input latency, the FreeSync standard is an excellent fit. On the other hand, G-Sync-equipped displays are a better choice if you want smooth motions without tearing and are okay with some input latency.

Both G-Sync and FreeSync provide excellent quality for everyday users and business professionals. If money isn’t an issue and you want top-tier graphics support, G-Sync is the clear winner.

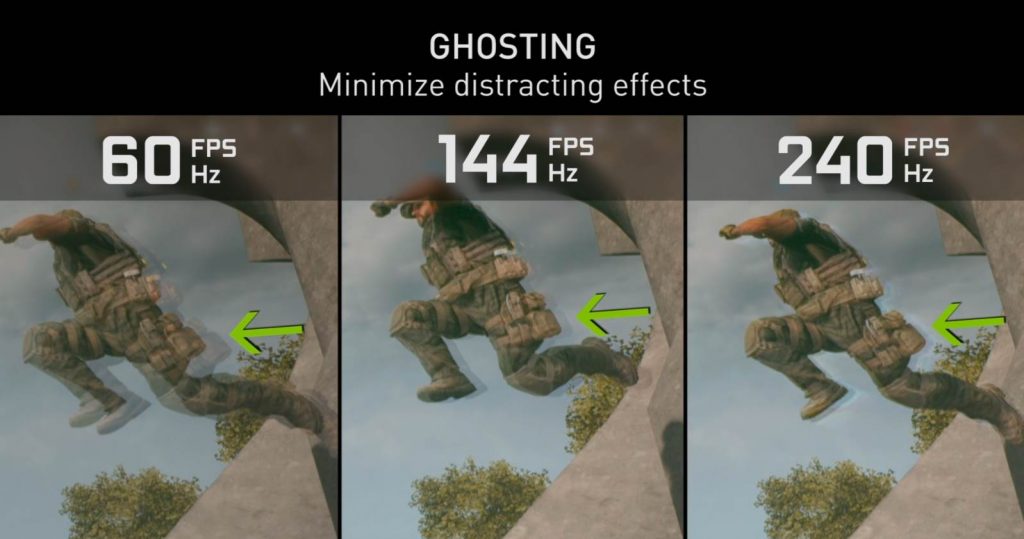

Users report that FreeSync reduces tearing and stuttering, but some displays exhibit another issue: ghosting.

As objects move across the screen, they leave faint shadows at their previous positions. It’s an artifact that some people never notice, but it irritates others.

Both FreeSync and G-Sync suffer when the frame rate doesn’t stay consistently within the display’s refresh range.

G-Sync can show flickering issues at very low frame rates, and while the technology usually adjusts to address this, there are exceptions.

FreeSync, meanwhile, stutters when the frame rate falls below a monitor’s stated minimum refresh rate.

Some FreeSync monitors have a very narrow adaptive refresh range, and if your graphics card can’t deliver frames within that range, problems arise.

Most reviewers who have compared the two seem to prefer G-Sync, which doesn’t stutter at low frame rates and is smoother in real-world scenarios. If you ask me, I also prefer G-Sync over FreeSync.

A few facts:

- Nvidia’s G-Sync and AMD’s FreeSync both have excellent capabilities that can improve your gaming experience.

- Comparing the two, G-Sync monitors are generally considered to have a somewhat better feature set, particularly models certified at the G-Sync Ultimate level.

- The difference isn’t so significant that you should never buy a FreeSync monitor.

- Many FreeSync displays can also run G-Sync (as G-Sync Compatible displays); however, they lack G-Sync’s proprietary hardware.

- All G-Sync Ultimate-certified displays support high dynamic range (HDR) color. The same cannot always be said of FreeSync displays.

- Because FreeSync is an open, royalty-free standard implemented over DisplayPort and HDMI 1.4, most modern displays can support it.

- While FreeSync monitors are more widespread and less expensive, G-Sync certification has stricter quality criteria that may appeal to buyers looking for high-end displays.

Because of this difference in certification, some customers report that FreeSync monitors are more prone to “ghosting,” where faint afterimages of objects linger on screen after they move or disappear.

When FPS drops below the monitor’s minimum supported refresh rate, both FreeSync and G-Sync run into problems. In most side-by-side comparisons, though, consumers tend to notice less stuttering with G-Sync.

FreeSync Vs G-Sync: Features

The two are fairly comparable, and you may choose FreeSync because supported monitors cost less.

However, the G-Sync certification program ensures that all certified displays support LFC (low framerate compensation).

LFC keeps adaptive sync working when the game’s frame rate falls below the monitor’s minimum adaptive sync refresh rate.

AMD developed FreeSync 2 partly to address this issue, narrowing the gap between the two companies even further, but more on that later.

AMD’s key advantage here is the same one that applies to its graphics cards: its products are often less expensive and offer better value for money than their Nvidia counterparts.

It’s worth noting that many people still game without adaptive sync. The technology is only really helpful if screen tearing breaks your immersion or if you’re playing AAA titles at 4K resolution on maximum settings.

That said, once you’ve used one of these technologies, it can be hard to go back; but if you’re on a tight budget, FreeSync or no adaptive sync at all makes more sense.

If you’re a competitive player, prioritize features like a higher refresh rate over the FreeSync vs G-Sync question, since refresh rate matters far more in competitive games.

However, you rarely get to have your cake and eat it too, and FreeSync comes with some complications. For example, FreeSync typically works only within a fixed framerate “range.”

This means FreeSync operates only within the range specified by the manufacturer, which may be 40-75Hz, 30-144Hz, or something else.

These ranges vary mainly because AMD doesn’t impose quality control as tight as Nvidia’s, so if you’re considering a FreeSync monitor, I recommend checking its specified framerate range before you buy.
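Why does the width of that range matter? Frame-repeating tricks such as LFC only help if the repeated frame rate lands back inside the range. The Python sketch below uses the two example ranges (40-75Hz and 30-144Hz) and checks which whole-number frame rates below the minimum cannot be rescued by showing each frame a whole number of times. It is a simplified arithmetic model, not AMD’s actual LFC algorithm, and `uncovered_frame_rates` is a hypothetical helper.

```python
def uncovered_frame_rates(min_hz: int, max_hz: int) -> list[int]:
    """Integer frame rates below min_hz that cannot be brought inside
    [min_hz, max_hz] by showing each frame a whole number of times."""
    gaps = []
    for fps in range(1, min_hz):
        # Ceiling division: the fewest repeats that reach at least min_hz.
        repeats = -(-min_hz // fps)
        # More repeats only raise the rate further, so if the fewest
        # repeats already overshoot max_hz, no repeat count works.
        if fps * repeats > max_hz:
            gaps.append(fps)
    return gaps


for low, high in ((40, 75), (30, 144)):
    print(f"{low}-{high}Hz range, uncovered frame rates: {uncovered_frame_rates(low, high)}")
```

Under this model, the 40-75Hz monitor cannot cover 38 or 39 FPS (doubling them gives 76 and 78Hz, just above its maximum), while the 30-144Hz monitor has no gaps. More generally, a range whose maximum is at least twice its minimum never has gaps, because the fewest repeats needed to reach the minimum always produce a rate below twice the minimum.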

When it comes to G-Sync, Nvidia’s stringent quality control ensures that the technology is correctly integrated and works as it should, with no framerate constraints. G-Sync also goes beyond simple adaptive sync by reducing motion blur, eliminating ghosting, and enabling display overclocking. Of course, as mentioned earlier, that quality and those added features come at a cost.

Looking across a range of G-Sync and FreeSync displays, the main takeaway is that G-Sync is a known quantity, while FreeSync monitors vary significantly in quality.

Some FreeSync monitors are gaming-focused, with high-end capabilities and LFC support, while many aren’t and are better suited to regular office use than gaming.

Buyers will need to research FreeSync displays more carefully than G-Sync models to make sure they get a suitable monitor with all the gaming features they want.

Which Technology Is Better for HDR?

AMD and Nvidia have added options to a potentially confusing market by releasing new versions of their adaptive sync technologies.

This is justified by some significant advances in display technology, especially HDR and expanded color.

According to Nvidia, a monitor can feature G-Sync, HDR, and extended color without earning the “Ultimate” certification. Nvidia reserves that label for displays that can provide what it calls “lifelike HDR.”

AMD’s counterpart is FreeSync Premium Pro. Like G-Sync Ultimate, it doesn’t add anything to the underlying adaptive sync technology.

Simply put, a FreeSync Premium Pro label means AMD has certified the display to deliver a premium experience, with at least a 120 Hz refresh rate, LFC, and HDR.

If a FreeSync display supports HDR, it will almost certainly support G-Sync as well (whether Nvidia-certified or not).

Another difference between G-Sync and FreeSync is low framerate compensation (LFC). Every adaptive sync monitor has a refresh rate window, such as 30 to 144 Hz, within which the refresh rate can dynamically adjust to match the GPU’s render rate.

In that example, whether the monitor supports LFC determines what happens when the frame rate falls between 0 and the display’s 30 Hz minimum refresh rate.

Supported Graphics Cards

G-Sync is compatible with ‘Kepler’ GeForce 600 series cards or newer, while FreeSync is compatible with the ‘Sea Islands’ Radeon R7/R9 200 series or newer.

The displays work normally with other graphics cards, but adaptive sync won’t function.

G-Sync

There has been one major limitation with G-Sync monitors for years: you must have an Nvidia graphics card.

Although an Nvidia GPU, such as the new RTX 3080, is still required to use G-Sync fully, more modern G-Sync displays offer HDMI variable refresh rate under the “G-Sync Compatible” label (more on that later).

That means an AMD card can use variable refresh rate on these displays, but not the full capabilities of Nvidia’s G-Sync module. Besides a G-Sync-branded display, here’s what you’ll need:

Desktop

GeForce GTX 650 Ti Boost or later.

Laptop (Built-in and External Displays)

GeForce GTX 965M, GTX 970M, GTX 980M

It took six years, but in 2019 Nvidia finally announced that it would adopt open standards, allowing AMD Radeon graphics cards to use G-Sync displays in the future. Hooray! Except there’s one major caveat.

Support for the required HDMI-VRR and Adaptive-Sync over DisplayPort will be provided only on new G-Sync modules. Therefore, most existing G-Sync displays won’t benefit AMD customers.

FreeSync

Desktop

PC gamers will need a DisplayPort (which also works over USB-C) or HDMI connection and the appropriate Radeon Software graphics driver.

From 2012 (Radeon HD 7000) onwards, any AMD GPUs, including third-party branded ones, as well as any AMD Ryzen-series APU, are supported.

Laptop

Some laptops with AMD GPUs have FreeSync built into their displays, and their spec sheets will say so.

Furthermore, every laptop equipped with an RX 500-series GPU supports external FreeSync displays.

Should I Buy A G-Sync Or FreeSync Gaming Monitor?

When purchasing a new gaming monitor, there is a lot to consider regarding resolution, panel types, and other display technology.

As we approach the Black Friday season, when many gaming displays go on sale, one of the key questions you’ll need to answer is which of these two options to pick.

Before I address that question, let’s go through what these technologies are. Traditional displays that do not natively offer adaptive sync have a fixed refresh rate, which means they will show frames at the same pace regardless of the framerate at which your games are running.

So, if you’re playing a game at 35 frames per second and your monitor refreshes at 60Hz, the panel will frequently show parts of two frames at once, resulting in screen tearing.
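To get a rough sense of how often that happens, the Python sketch below assumes an idealized game that finishes frames at a perfectly steady 35 FPS with no synchronization at all, and a 60Hz panel that spends each whole refresh interval scanning the image from top to bottom. Any buffer swap that lands partway through a scan splits that refresh between two frames. `torn_refreshes` is a hypothetical helper, and exact fractions are used so that swaps falling exactly on a refresh boundary are detected reliably.

```python
from fractions import Fraction


def torn_refreshes(fps: int, refresh_hz: int, seconds: int = 1) -> int:
    """Count refreshes during which a buffer swap lands mid-scanout.

    Idealized model with no sync at all: the game swaps in a new frame the
    instant it finishes, at a perfectly steady fps, and the panel spends each
    whole refresh interval scanning the image from top to bottom."""
    torn = set()  # a refresh split by several swaps still counts once
    for k in range(1, fps * seconds):
        swap_time = Fraction(k, fps)  # exact moment frame k is swapped in (seconds)
        position = swap_time * refresh_hz  # same moment, measured in refresh intervals
        if position.denominator != 1:  # not exactly on a refresh boundary
            torn.add(int(position))  # index of the refresh that gets split
    return len(torn)


print(torn_refreshes(35, 60))
print(torn_refreshes(60, 60))
```

Under these assumptions, 30 of the 60 refreshes in each second contain a tear line. At a steady 60 FPS perfectly aligned with the panel, the count drops to zero, and that alignment between frames and refreshes is exactly what V-Sync, G-Sync, and FreeSync each try to enforce in their own way.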

Both G-Sync and FreeSync do the same thing: they eliminate stuttering and screen tearing in games. They are implemented differently, though, and each has pros and cons.

G-Sync monitors use a proprietary Nvidia scaler and only have two inputs: DisplayPort and HDMI, with DisplayPort being the only one that supports adaptive sync.

This scaler is a piece of hardware that manufacturers must install in their displays to enable G-Sync, and it raises their price by an average of $200 (£150) compared with equivalent FreeSync models.

FreeSync is an open standard designed by AMD that does not require any additional hardware to run on displays. In addition to being less expensive than their G-Sync rivals, FreeSync displays offer more connectivity options, including older connectors like DVI and VGA.

FreeSync has the bonus of running via HDMI in addition to DisplayPort. However, in my opinion, FreeSync through HDMI does not always work as expected.

Another issue is what happens when the frame rate drops below a monitor’s adaptive sync range. LFC prevents stuttering in that situation, and it is one of the critical benefits of G-Sync over FreeSync, as most FreeSync displays don’t support LFC.

AMD has created FreeSync 2, a new adaptive sync technology, in recognition of the significance of LFC for the best adaptive sync experience.

Newly released displays support the next generation of adaptive sync: FreeSync 2 and G-Sync HDR. Despite its name, FreeSync 2 is not a successor to FreeSync; the two will coexist on the market.

Thanks to a certification procedure similar to G-Sync’s, FreeSync 2 displays are guaranteed to feature LFC, HDR compatibility, and low latency.

Beyond their other added capabilities, the key selling point of FreeSync 2 and G-Sync HDR is adaptive sync in HDR-enabled games.

FreeSync Premium & FreeSync Premium Pro

Although the basic FreeSync version was primarily developed for 60Hz, all tiers of FreeSync enable hardware-level adaptive sync.

FreeSync Premium and Premium Pro better support higher framerates. In fact, on full HD 1080p monitors, both upper tiers require a minimum of 120Hz.

Only 1080p monitors with refresh rates of 120Hz or higher can use FreeSync Premium/Premium Pro.

4K monitors are exempt from this requirement. This is because 4K monitors prioritize resolution and visual fidelity above raw framerate for the time being.

This will change as graphics hardware advances and 8K becomes the typical resolution.

Low framerate compensation, or LFC, is another feature that both FreeSync Premium and Premium Pro include. It prevents the judder caused by frame rates dropping below your monitor’s supported range: when the frame rate falls below the minimum refresh rate, FreeSync shows each frame more than once so the refresh rate stays within range.

For example, if your display’s variable refresh range is 60Hz to 120Hz, FreeSync Premium/Premium Pro will repeat frames whenever the game’s frame rate falls below 60 FPS.

Because LFC repeats real frames instead of inventing in-between ones, games don’t suffer from the “soap opera effect” that motion smoothing causes in TV and movies, so discomfort or visual artifacts are very uncommon.
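Using the 60Hz to 120Hz range from the example above, here is a minimal Python sketch of the frame-repetition idea behind LFC. It is a simplification under stated assumptions, not AMD’s driver logic (real implementations may pick different multipliers or adjust them dynamically), and the name `lfc_refresh` is hypothetical.

```python
import math


def lfc_refresh(fps: float, min_hz: float = 60.0, max_hz: float = 120.0) -> tuple[int, float]:
    """Simplified low framerate compensation (LFC) model.

    Returns (times_each_frame_is_shown, panel_refresh_hz). Inside the VRR
    window every frame is shown once; below it, each frame is shown the fewest
    whole number of times that lifts the refresh rate back to min_hz or above."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= min_hz:
        return 1, min(fps, max_hz)  # normal VRR; refresh is capped at max_hz
    repeats = math.ceil(min_hz / fps)
    refresh_hz = fps * repeats
    if refresh_hz > max_hz:
        raise ValueError("VRR range too narrow to repeat frames at this frame rate")
    return repeats, refresh_hz


for fps in (90, 45, 25):
    repeats, hz = lfc_refresh(fps)
    print(f"{fps} FPS -> each frame shown {repeats}x, panel runs at {hz} Hz")
```

In this model, a game that drops to 45 FPS has each frame displayed twice, so the panel stays inside its range at 90 Hz instead of falling out of sync; at 25 FPS, each frame is shown three times and the panel runs at 75 Hz.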

In any case, FreeSync Premium Pro is the tier to aim for, because all tiers of FreeSync remain “free” in principle: AMD does not charge monitor manufacturers for the technology, although the additional processing components do add cost.

Because FreeSync is an open standard, the price difference between tiers is usually insignificant, so you may as well buy the highest tier.

Bottom line: because HDR in games is becoming increasingly important, FreeSync Premium Pro will ultimately become the standard, and you should strive for it.

G-Sync Ultimate & G-Sync Compatible

Native G-SYNC monitors use an NVIDIA chip inside the display. Until the release of “G-SYNC Compatible” displays, this was the only way to get variable refresh rate gaming working on your NVIDIA graphics card.

The distinction between a G-SYNC and a G-SYNC Compatible monitor is straightforward. The former delivers the experience G-SYNC has always been known for.

With a G-SYNC monitor, you can expect lower input latency and less screen tearing with variable refresh rate output.

G-SYNC Compatible displays, on the other hand, are monitors that provide what NVIDIA calls a ‘Validated Experience.’

NVIDIA has tested and approved these displays, so they are considered compliant. Like FreeSync displays, G-SYNC Compatible displays use the VESA Adaptive-Sync standard, which means they share the same limitations, such as a VRR range that starts at 40Hz or 48Hz.

If a monitor is not certified by NVIDIA to be G-SYNC Compatible, it may still operate with VRR on an NVIDIA graphics card, but not flawlessly.

The best way to be sure, and to avoid disappointment, is to research any potential purchase extensively.

FreeSync Vs G-Sync: Which One Is Best for You?

Because both technologies solve the same core problems, the choice largely comes down to brand preference.

Remember that while any monitor will operate regardless of the graphics card, G-Sync or FreeSync compatibility needs NVIDIA or AMD graphics, respectively.
